Recently, large language models (LLMs) such as ChatGPT and Med-PaLM have generated a lot of buzz in the press and among emergency physicians. LLMs are designed to process large amounts of data, synthesize information, and generate text, which are tasks emergency physicians also perform every day.


ACEP Now: Vol 42 – No 06 – June 2023

Our specialty is a fast-paced, dynamic medical field that demands rapid decision-making and adaptability. But it also entails mundane, repetitive tasks that demand our time and focus. Advances in artificial intelligence (AI), including the development of LLMs, could assist emergency physicians in their daily practice and research endeavors.

Although publications describing ChatGPT performing at or near the passing threshold on the USMLE and Med-PaLM 2 answering medical questions at an expert level are interesting, implementing these models also comes with potential risks and downsides.1,2 We need to navigate challenges, foster ethical use, and identify best practices for emergency department (ED) applications. In this article, we explore several ways emergency physicians can use LLMs and discuss the challenges they present.

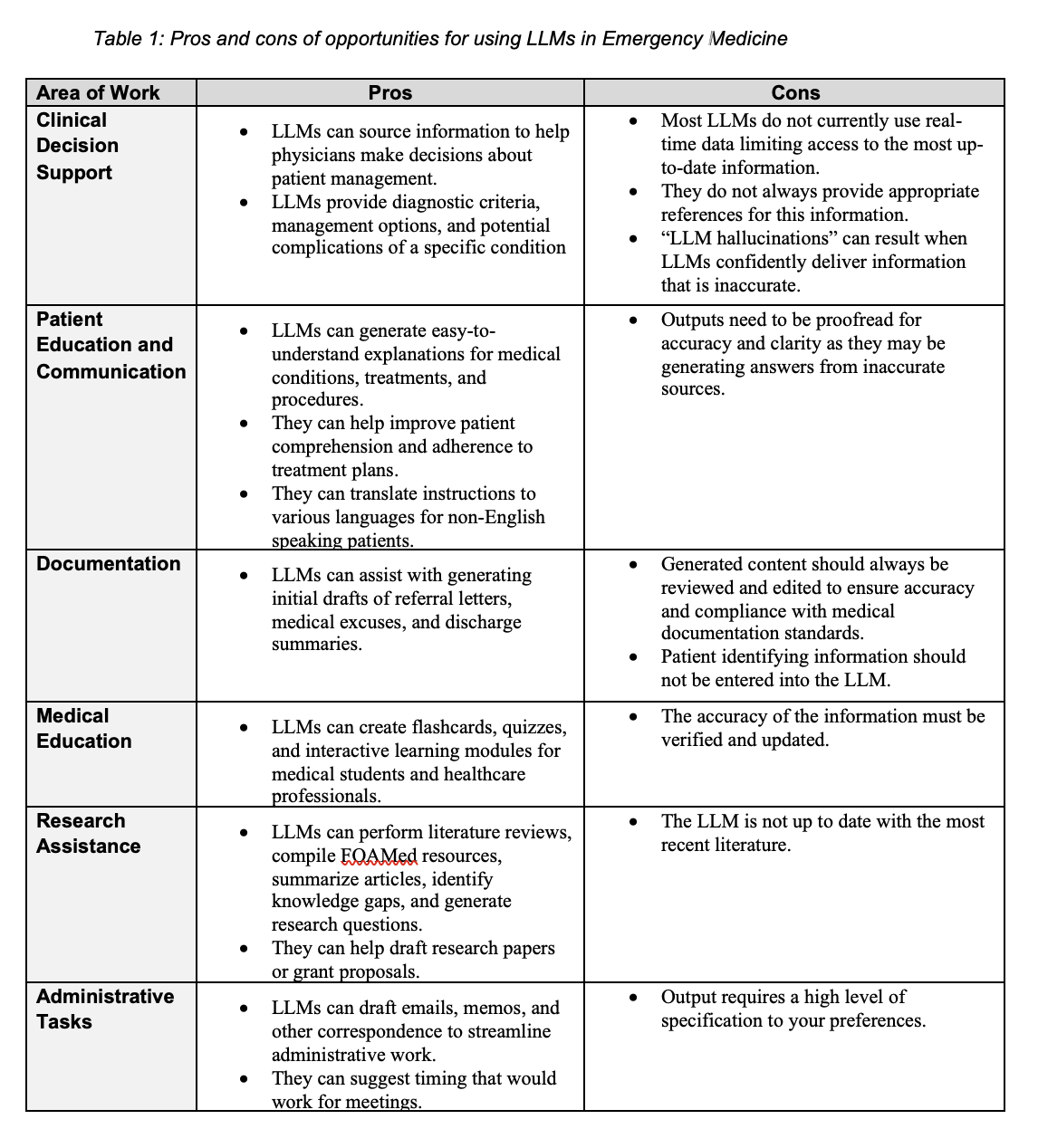

Opportunities for Using LLMs in Emergency Medicine

The role of LLMs in direct clinical care, from triage through discharge, will likely grow enormously in the near future. LLMs can rapidly source and synthesize medical data for clinical decision support (CDS). They can also summarize patients’ medical histories across unified electronic health records (EHRs), highlighting elements that may be relevant to the current presenting condition.

Once clinicians begin to chart and manage a patient, the real fun begins. Not only might LLMs help complete elements of the note or check off billing requirements, they may also recommend tests or flag the presence of diagnostic criteria for specific diseases. Imagine writing a history of present illness (HPI) while red flags for back pain are highlighted and follow-up questions are suggested. In the same way, LLMs may suggest workup and management options. Once a diagnosis is made, LLMs drawing on similar prior patient encounters may suggest likely trajectories for the condition or possible complications. They may also reduce our administrative burden by generating initial drafts of referral letters and discharge summaries, freeing up more of our time for direct patient care. Ultimately, emergency physicians may find themselves with an invaluable tool for expedient and accurate decision-making.

Although the role of LLMs in direct patient care is perhaps our first thought, much of what emergency physicians do involves teaching patients how to manage their conditions after they leave the ED. Here, LLMs could significantly enhance patient education and communication. They can generate understandable explanations of medical conditions and procedures, fostering better patient comprehension and treatment adherence. They can also translate these explanations into various languages, helping address the language barriers often faced by non-English-speaking patients. Beyond the visit, Doximity’s DocsGPT can be used to draft and submit denial appeals and letters of medical necessity.3

Clinical care is just one of our many responsibilities in emergency medicine. We also have to stay up to date on current best practices. Here, LLMs can create dynamic educational tools, such as quizzes or interactive modules, that might provide an individualized, engaging learning platform for trainees as well as seasoned professionals. Furthermore, they can help perform literature reviews, compile free open access medical education (FOAMed) resources, and summarize articles. Is something missing from the literature? Does a research opportunity exist to fill a knowledge gap? If so, LLMs can help draft grant proposals or outline papers, offering considerable support for research scientists.

Finally, especially for emergency physicians who feel there just are not enough hours in the day, it’s often administrative tasks that feel the most burdensome. LLMs will likely become a growing part of these day-to-day activities. Some email apps already use LLMs to draft routine emails and memos, and these tools can also suggest meeting times or locations.

Risks of Using LLMs in Emergency Medicine

While the advantages are promising, the adoption of LLMs also poses challenges and potential risks (see Table 1).

First and foremost is the issue of accuracy and reliability. While LLMs are undeniably powerful, they are not immune to generating information that is inaccurate or outdated. As physicians, we must use our clinical acumen to cross-check and validate the information these AI tools offer. We know that not all patients are equally represented in the data sets used to build LLMs, and that the content of these data can itself be inaccurate. Furthermore, even the published literature that LLM-assisted searches and reviews draw on is imperfect: some analyses suggest that more than half of published research findings may be false or irreproducible.4

The integration of AI in health care also brings a host of ethical questions to the forefront. It is unclear whether LLM output can be applied to individual ED patients, and there are concerns about how the information we enter is handled. Questions about obtaining informed consent may weigh even more heavily than the potential for algorithmic bias. Given that queries are time-stamped, that clinical presentations can be highly distinctive, and broader privacy concerns, some U.S. hospitals are considering prohibiting the use of ChatGPT. Italy temporarily banned ChatGPT nationwide over privacy concerns, and physicians in Perth, Australia, have been barred from using it to protect patient confidentiality.5,6

The primacy of patient privacy and data security cannot be overstated. As we deploy AI tools in clinical practice, we must ensure stringent measures are in place to prevent inadvertent data leaks or misuse. Patient trust is paramount in our field, and we must strive to maintain it as we navigate the intersection of health care and AI.

Furthermore, as these tools become increasingly ubiquitous, we may risk becoming overly reliant on them. These tools are designed to assist, not replace, human judgment. Delegating too much responsibility to these models could erode our clinical acumen, potentially leading to worse patient outcomes and greater inequity in care. As tempting as it may be to offload administrative tasks, physicians need to remember that LLMs should not depersonalize care and are not suited to take over the physician’s role in documenting each patient’s unique story and the medical decision-making that guides their care.7

As exciting as the adoption of LLMs in emergency medicine might be, their integration requires a balanced and cautious approach. Weighing their utility against the challenges they present will be key to ensuring we use them effectively and ethically.

Best Practices for LLMs in Emergency Medicine

As we integrate LLMs into our fast-paced emergency departments, several key points can help ensure a successful transition.

- LLMs should be viewed as tools to enhance, not replace, our clinical judgment and expertise. We should pinpoint clear objectives that we hope to achieve with this AI support. These could range from gaining faster access to evidence-based literature to translating patient instructions into diverse languages and bolstering our literature reviews and educational initiatives.

- With the introduction of AI tools comes the necessity for targeted training and education. We, as emergency physicians, need to be fully aware of the limitations of these tools. It’s crucial to accurately interpret their outputs and always recognize instances when our own human judgment should be the final call.

- Privacy and security of data are paramount in this digital age. We need to ensure strict compliance with regulations such as the Health Insurance Portability and Accountability Act (HIPAA) and provide secure storage and handling of sensitive patient information.

- Even though LLMs come with impressive capabilities, we need to be mindful of their potential for error. Regular monitoring of their performance and accuracy is a necessary step in ensuring their reliability as we integrate them into our demanding roles.

- Moreover, aligning our practices with professional organizations and regulatory bodies is essential. We need to work together to establish comprehensive guidelines that address the legal and ethical implications of LLM use in medicine. For instance, the American Medical Informatics Association (AMIA) offers a set of guidelines for ethical AI use which can serve as a reference point.

It’s important to remember that LLMs, despite their numerous advantages, are tools. They are not substitutes for our clinical judgment or expertise. As we cope with growing numbers of patients presenting with complex conditions, additional help is always welcome. However, we should adopt a “cautiously optimistic” approach, using LLMs as a supplement rather than a panacea, to tap into their potential while ensuring we deliver the highest quality of patient care and maintain safety.

References

- Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2(2):e0000198. Published 2023 Feb 9.

- Singhal K, Tu T, Gottweis J, et al. Towards expert-level medical question answering with large language models. arXiv. 2023;arXiv:2305.09617.

- Landi H. Doximity rolls out beta version of ChatGPT tool for docs aiming to streamline administrative paperwork. Fierce Healthcare. https://www.fiercehealthcare.com/health-tech/doximity-rolls-out-beta-version-chatgpt-tool-docs-aiming-streamline-administrative

- Ioannidis JP. Why most published research findings are false [published correction appears in PLoS Med. 2022 Aug 25;19(8):e1004085]. PLoS Med. 2005;2(8):e124.

- McCallum S. ChatGPT banned in Italy over privacy concerns. BBC News. https://www.bbc.com/news/technology-65139406

- Moodie C. “Cease immediately”: doctors forbidden from using artificial intelligence amid patient confidentiality concerns. ABC News. https://www.abc.net.au/news/2023-05-28/ama-calls-for-national-regulations-for-ai-in-health/102381314

- Preiksaitis C, Sinsky CA, Rose C. ChatGPT is not the solution to physicians’ documentation burden [published online ahead of print, 2023 May 11]. Nat Med. 2023;doi:10.1038/s41591-023-02341-4.


No Responses to “Leveraging Large Language Models (like ChatGPT) in Emergency Medicine: Opportunities, Risks, and Cautions”